Projection Matrix In Linear Regression

See Section 5, "Multiple Linear Regression", of "Derivations of the Least Squares Equations for Four Models" for technical details. $P_2 = I_n - X(X^\top X)^{-1}X^\top$.

Explained Sums Of Squares In Matrix Notation Cross Validated

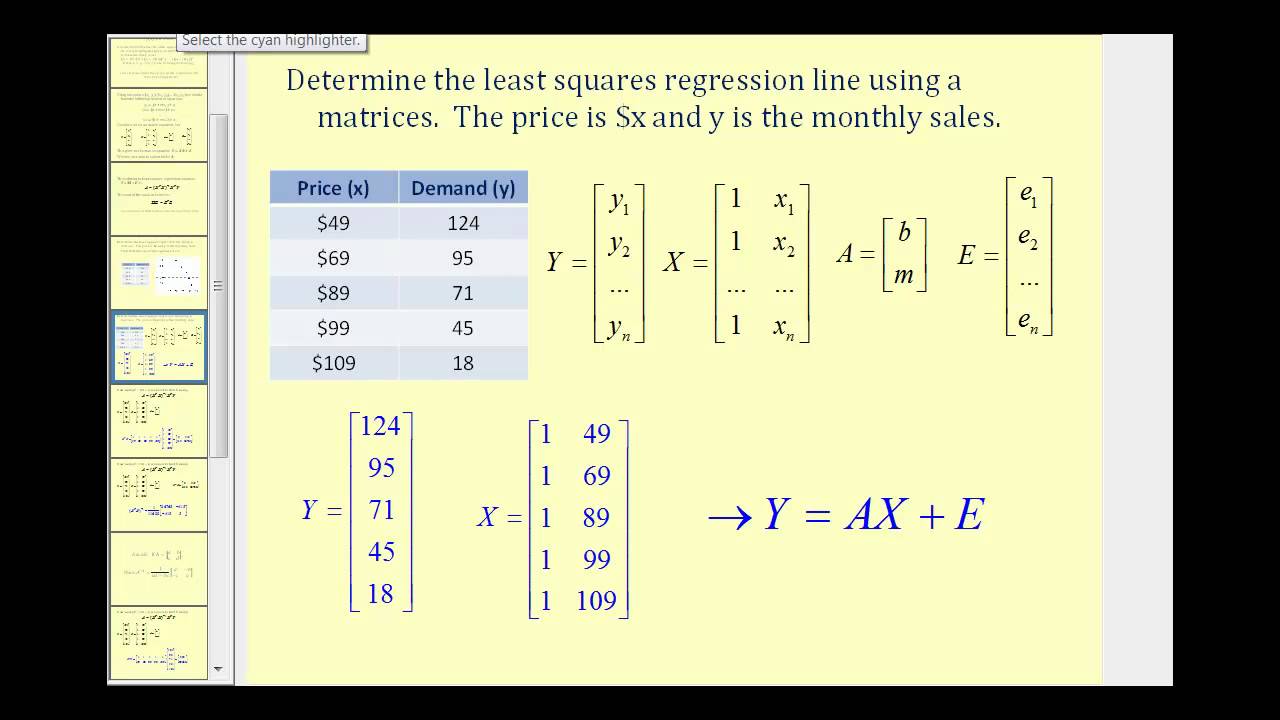

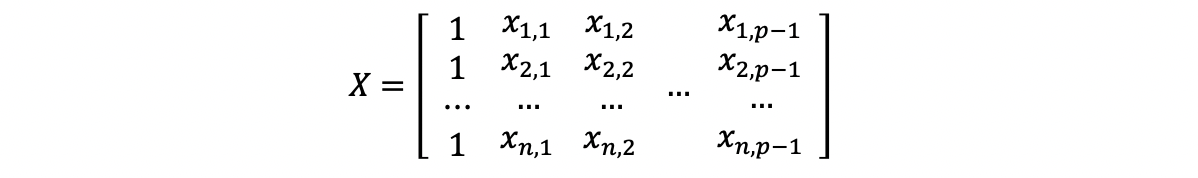

We will consider the linear regression model in matrix form.

Projection matrix in linear regression. Linear regression is a simple algebraic tool that attempts to find the best (generally straight) line fitting two or more attributes, with one attribute (simple linear regression) or a combination of several (multiple linear regression) being used to predict another, the class attribute. First, and most simply, the projection matrix is square. In linear algebra and functional analysis, a projection is a linear transformation $P$ from a vector space to itself such that $P^2 = P$.

Orthogonal projection matrices are also symmetric, i.e. $P = P^\top$. Since projection matrices are always positive semidefinite, the diagonal entries of $\mathbf{P}$ satisfy $p_{ii} \geq 0$. I understand that the trace of the projection matrix (also known as the hat matrix) $X(X^\top X)^{-1}X^\top$ in linear regression is equal to the rank of $X$.
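The properties listed above can be checked numerically. The following is a minimal NumPy sketch, not taken from any of the quoted sources: the helper name `hat_matrix` and the random design matrix are assumptions made for illustration. It uses the pseudoinverse, which coincides with $X(X^\top X)^{-1}X^\top$ when $X$ has full column rank.

```python
import numpy as np

def hat_matrix(X):
    """Orthogonal projector onto the column space of X.

    Hypothetical helper: X @ pinv(X) equals X (X^T X)^{-1} X^T
    whenever X has full column rank.
    """
    return X @ np.linalg.pinv(X)

rng = np.random.default_rng(0)
X = rng.normal(size=(8, 3))      # illustrative data: 8 observations, 3 columns
P = hat_matrix(X)

is_symmetric = np.allclose(P, P.T)        # P = P^T
is_idempotent = np.allclose(P @ P, P)     # P^2 = P
trace_P = np.trace(P)                     # should match the rank of X
rank_X = np.linalg.matrix_rank(X)
diag_P = np.diag(P)                       # each entry lies in [0, 1]

print(is_symmetric, is_idempotent, round(trace_P, 6), rank_X)
```

The trace equals the rank because an idempotent matrix has eigenvalues that are all 0 or 1, so summing them counts the dimension of the subspace being projected onto.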

Linear regression is commonly used to fit a line to a collection of data. In fact, you can show that since $\mathbf{P}$ is symmetric and idempotent, it satisfies $0 \leq p_{ii} \leq 1$. Then $h_{ii} \geq 1/n$, as needed. Matrix inverses in the regression context: an $n \times n$ matrix $A$ is called invertible if there exists an $n \times n$ matrix $B$ such that $AB = BA = I_n$. $B$ is called the inverse of $A$ and is typically denoted by $B = A^{-1}$.

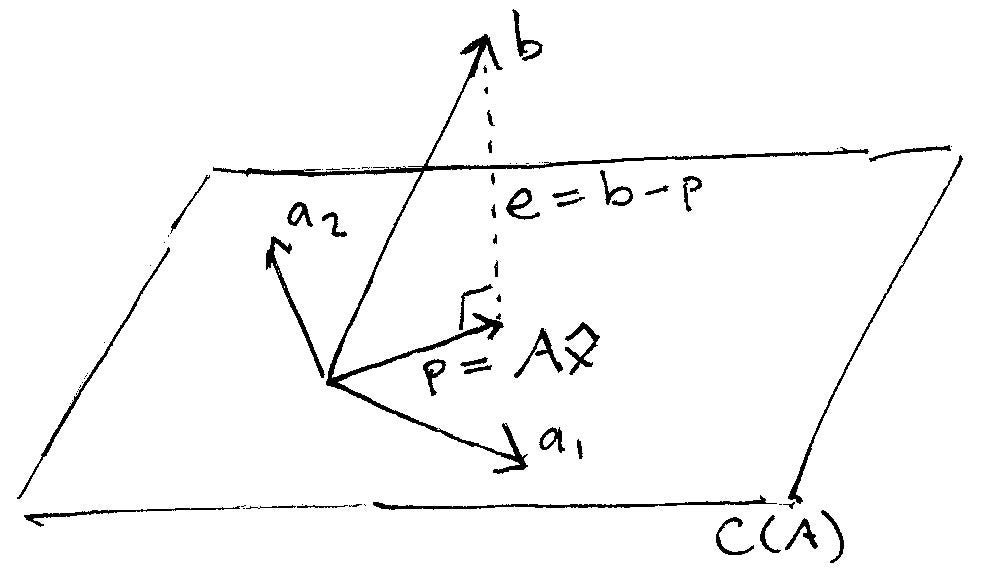

If $b$ is perpendicular to the column space, then it is in the left nullspace $N(A^\top)$ of $A$ and $Pb = 0$. Projection matrices and least squares. Projections: last lecture we learned that $P = A(A^\top A)^{-1}A^\top$ is the matrix that projects a vector $b$ onto the space spanned by the columns of $A$. Equation (25) underlies another meaning of the word "linear" in linear regression.
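The two extreme cases for $Pb$ can be illustrated with a small example. This is a sketch under stated assumptions: the matrix `A` and the vectors `b_in` and `b_perp` are made up for illustration, with `b_perp` chosen so that $A^\top b = 0$.

```python
import numpy as np

# Illustrative 3x2 matrix with linearly independent columns.
A = np.array([[1.0, 0.0],
              [1.0, 1.0],
              [1.0, 2.0]])

# P = A (A^T A)^{-1} A^T projects onto the column space of A.
P = A @ np.linalg.inv(A.T @ A) @ A.T

b_in = A @ np.array([2.0, -1.0])       # lies in the column space: b = A x
b_perp = np.array([1.0, -2.0, 1.0])    # satisfies A^T b = 0 (left nullspace)

proj_in = P @ b_in       # expected to equal b_in
proj_perp = P @ b_perp   # expected to be the zero vector

print(np.allclose(proj_in, b_in), np.allclose(proj_perp, 0.0))
```

Any other vector splits into a column-space part and a left-nullspace part, and $P$ keeps the first while discarding the second.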

In most cases we also assume that this population is normally distributed. The predicted value of $Y$ at any new point $x_0$, with features $z_0$ computed from $x_0$, is also linear in $Y$. For a given model with independent variables and a dependent variable, the hat matrix is $H = X(X^\top X)^{-1}X^\top$.

Matrices - Proof that trace of hat matrix in linear regression is rank of X - Mathematics Stack Exchange. For simple linear regression, meaning one predictor, the model is $Y_i = \beta_0 + \beta_1 x_i + \varepsilon_i$ for $i = 1, 2, 3, \dots, n$. This model includes the assumption that the $\varepsilon_i$'s are a sample from a population with mean zero and standard deviation $\sigma$. Though abstract, this definition of projection formalizes and.

One important matrix that appears in many formulas is the so-called hat matrix, $H = X(X^\top X)^{-1}X^\top$, since it puts the hat on $Y$. This means that performing a linear regression is like finding the orthogonal projection of a target vector onto the subspace spanned by the functions we want to include in the regression. Further Matrix Results for Multiple Linear Regression.

It leaves its image unchanged. $P = X(X^\top X)^{-1}X^\top$.

The estimated coefficient is a fixed linear combination of $Y$, meaning that we get it by multiplying $Y$ by the matrix $(Z^\top Z)^{-1}Z^\top$. Here $vv^\top$ is the $n \times n$ projection matrix, or projection operator, for that line. When an idempotent matrix $H$ also satisfies $H = H^\top$ (symmetric), it is called an orthogonal projection matrix.

So $\mathbf{P}$ is also a projection matrix. Matrix Form of Regression Model: Finding the Least Squares Estimator. Note: inverses only exist for square matrices with non-zero determinants. Example: for $A = \begin{pmatrix} a & b \\ c & d \end{pmatrix}$, $A^{-1} = \frac{1}{ad - bc}\begin{pmatrix} d & -b \\ -c & a \end{pmatrix}$.
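The 2×2 inverse formula can be written out directly. The function name `inverse_2x2` and the example matrix below are assumptions chosen for illustration; in practice `np.linalg.inv` would normally be used instead.

```python
import numpy as np

def inverse_2x2(A):
    """Invert a 2x2 matrix using the closed-form (adjugate / determinant) formula."""
    (a, b), (c, d) = A
    det = a * d - b * c
    if np.isclose(det, 0.0):
        # A zero determinant means no inverse exists.
        raise ValueError("matrix is singular")
    return np.array([[d, -b], [-c, a]]) / det

A = np.array([[2.0, 1.0],
              [5.0, 3.0]])     # determinant is 2*3 - 1*5 = 1
A_inv = inverse_2x2(A)

print(A_inv)                   # [[ 3. -1.] [-5.  2.]]
print(np.allclose(A @ A_inv, np.eye(2)))
```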

Matrix notation applies to other regression topics, including fitted values, residuals, sums of squares, and inferences about regression parameters. Is a projection matrix. The geometric meaning of idempotency here is that once we've projected $u$ onto the line, projecting its image onto the same line doesn't change anything.

Linear algebra provides a powerful and efficient description of linear regression in terms of the matrix $A^\top A$. In this case point 42 was. So $h_{ii} = p_{ii} + c_{ii} = p_{ii} + 1/n$.
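The leverage identity can be checked numerically under one reading of the notation, which is an assumption here: $H$ is the hat matrix of a model with an intercept, $P$ is the projection onto the mean-centered predictors, and $C = \frac{1}{n}\mathbf{1}\mathbf{1}^\top$ is the projection onto the constant vector. The data are random and purely illustrative.

```python
import numpy as np

rng = np.random.default_rng(1)
n = 10
x = rng.normal(size=(n, 2))                  # two illustrative predictors

# Hat matrix of the model with an intercept column.
X = np.column_stack([np.ones(n), x])
H = X @ np.linalg.inv(X.T @ X) @ X.T

# Projection onto the centered predictors (assumed meaning of P).
Xc = x - x.mean(axis=0)
P = Xc @ np.linalg.inv(Xc.T @ Xc) @ Xc.T

# Projection onto the constant vector (assumed meaning of C); diagonal is 1/n.
C = np.full((n, n), 1.0 / n)

h = np.diag(H)
print(np.allclose(H, P + C))                 # H = P + C
print(bool(np.all(h >= 1.0 / n - 1e-12)))    # h_ii >= 1/n
```

The decomposition works because the centered columns are orthogonal to the vector of ones, so the two projections add, and $p_{ii} \geq 0$ then gives the lower bound on each leverage.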

The model for $\mu = E(y_{ij})$, $$\mu_i = \beta_0 + \beta_1 (x_i - \hat{x}),$$ has linear predictor $X\beta = \begin{bmatrix} 1 & \dots \end{bmatrix}$. If $b$ is in the column space, then $b = Ax$ for some $x$ and $Pb = b$. P P P P.

The hat matrix in regression is just another name for the projection matrix. Inverse function - Projection matrix for a linear regression model with low rank. Since $v$ is a unit vector, $v^\top v = 1$ and $(vv^\top)(vv^\top) = vv^\top$ (41), so the projection operator for the line is idempotent.
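The rank-one case is easy to try by hand. In this sketch the unit vector `v` and the vector `u` are arbitrary illustrative choices, not values from the quoted sources.

```python
import numpy as np

v = np.array([3.0, 4.0]) / 5.0     # unit vector: v^T v = 1
P = np.outer(v, v)                 # projection operator v v^T for the line through v

u = np.array([2.0, 1.0])
once = P @ u                       # projection of u onto the line
twice = P @ once                   # projecting again changes nothing

print(once)                        # [1.2 1.6]
print(np.allclose(once, twice), np.allclose(P @ P, P))
```

Here $v^\top u = 2$, so the projection is $2v = (1.2, 1.6)$, and applying $P$ a second time returns the same point.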

It is $z_0^\top (Z^\top Z)^{-1} Z^\top Y$. The method of least squares can be viewed as finding the projection of a vector. Since $v$ is of some arbitrary dimensions $n \times k$, its transpose is of dimensions $k \times n$.

But in a linear models course, a projection matrix is defined in another way. A set of training instances is used to compute the linear model with one attribute or a set of attributes. That is, whenever $P$ is applied twice to any value, it gives the same result as if it were applied once.

$\hat{Y} = X\hat{\beta} = X(X^\top X)^{-1}X^\top Y = PY$. Frank Wood (fwood@stat.columbia.edu), Linear Regression Models, Lecture 11, Slide 20. Hat matrix: puts the hat on $Y$. We can also directly express the fitted values in terms of only the $X$ and $Y$ matrices, and we can further define $H$, the hat matrix. The hat matrix plays an important role in diagnostics for regression analysis. Write $H$ on board.
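The claim that the hat matrix produces the fitted values can be compared against an ordinary least squares solver. This is a minimal sketch on simulated data; the coefficients 1.5 and 0.8, the noise level, and all variable names are assumptions made for illustration.

```python
import numpy as np

rng = np.random.default_rng(2)
n = 20
x = rng.uniform(0, 10, size=n)
X = np.column_stack([np.ones(n), x])                  # intercept plus one predictor
y = 1.5 + 0.8 * x + rng.normal(scale=0.5, size=n)     # simulated response

# Fitted values straight from the hat matrix: y_hat = H y.
H = X @ np.linalg.inv(X.T @ X) @ X.T
y_hat_from_H = H @ y

# Fitted values from the least squares coefficients: y_hat = X beta_hat.
beta_hat, *_ = np.linalg.lstsq(X, y, rcond=None)
y_hat_from_beta = X @ beta_hat

residuals = y - y_hat_from_H
print(np.allclose(y_hat_from_H, y_hat_from_beta))
print(np.allclose(X.T @ residuals, 0.0, atol=1e-8))   # residuals are orthogonal to the columns of X
```

Forming the $n \times n$ matrix $H$ explicitly is fine for small examples and for diagnostics such as leverages, but for fitting alone a solver like `np.linalg.lstsq` avoids building it.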

If you prefer, you can read Appendix B. By linear algebra, the shape of the full matrix is therefore $n \times n$, i.e. square. For any matrix $X$ for which $X^\top X$ is invertible, $X(X^\top X)^{-1}X^\top$ is an orthogonal projection, and it projects a vector onto the range space (column space) of $X$.

Chapter 3 Linear Projection 10 Fundamental Theorems For Econometrics

Perform Linear Regression Using Matrices Youtube

Introduction To The Hat Matrix In Regression Youtube

Hat Matrix And Leverages In Classical Multiple Regression Cross Validated

Projection Matrices Ols Youtube

How Is It The Hat Matrix Spans The Column Space Of X Really Nice Youtube

The Linear Algebra View Of Least Squares Regression By Andrew Chamberlain Ph D Medium

What Is The Importance Of Hat Matrix H X X Top X 1 X Top In Linear Regression Cross Validated

Linear Regression 5 Mlr Hat Matrix And Mlr Ols Evaluation By Adam Edelweiss Serenefield Medium

Why Linear Regression Is A Projection By Vladimir Mikulik Medium
